If you find race-specific medicine surprising, wait until you learn that many doctors in the United States still use an updated version of a diagnostic tool that was developed by a physician during the slavery era, a diagnostic tool that is tightly linked to justifications for slavery.

Dorothy Roberts

University of Pennsylvania

Historical and structural inequalities are closely tied to bias. Historical power imbalances have had profound consequences for certain racial and ethnic groups: early deaths, unnecessary disabilities, and enduring injustices and inequalities (Global Health 50/50, 2020).

What is Colonial Medicine?

Colonial medicine was a critical element of colonialism. Its priorities were protecting European health, maintaining military superiority, and supporting extractive industries*. It emphasized malaria, yellow fever, sleeping sickness, and other specific diseases, relying on bacteriological approaches to disease control (Global Health 50/50, 2020).

*Extractive industries: companies and activities involved in the removal of resources for processing and sale, such as oil, sand, rock, gravel, metals, minerals, and other materials.

While colonial physicians and scientists made substantial contributions to medicine, they worked almost entirely on health issues specific to the colonies. In doing so, they established a focus on infectious diseases found in the colonies, an interest that global health has inherited (Global Health 50/50, 2020).

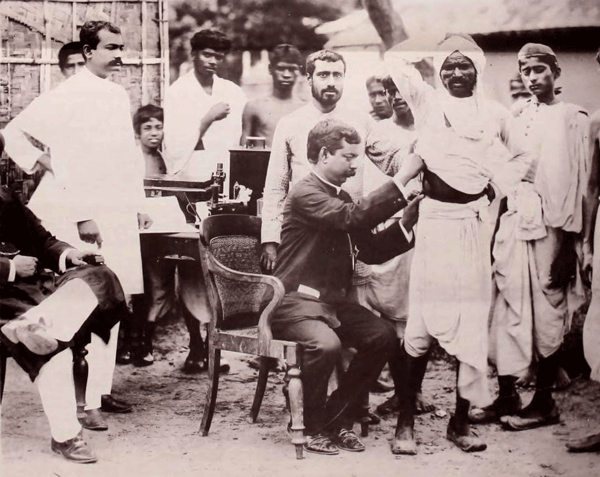

Anti-cholera vaccination, Calcutta, 1895. Wellcome Collection. Public domain.

In the colonial and post-colonial periods, European and American special interest groups have followed this pattern, using their leverage to sustain a focus on these diseases through, for example, public-private partnerships for drug development. Virtually all major pharmaceutical manufacturers either supplied medicines that sustained colonialism or evolved from firms that did (Global Health 50/50, 2020).

The Development of the U.S. Healthcare System

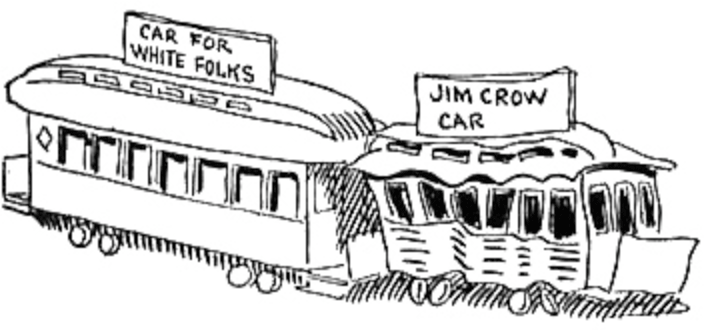

The U.S. healthcare system began to develop in the mid-1800s, shaped in part by the Civil War. With the end of slavery and the onset of the Jim Crow era*, racism became, both implicitly and explicitly, part of the structure and financing of the U.S. healthcare system (Yearby, Clark, & Figueroa, 2022). Despite Jim Crow's pretense that the races were “separate but equal” under the law, non-whites were given inferior facilities and treatment.

*Jim Crow era: The period defined by statutes and ordinances, first established in 1874, that separated the White and Black races in the American South. Hospitals, orphanages, and prisons were segregated, as were schools and colleges.

Jim Crow had a tremendous impact on the structure and functioning of the U.S. healthcare system. For more than a century following the Civil War, non-white people experienced a lack of services, discrimination, and the adverse effects of a healthcare system that provided inferior care to minorities, people of color, and Indigenous people.

“Separate but equal” became the law of the land in 1896, when the Supreme Court ruled in Plessy v. Ferguson that state segregation laws for public facilities were constitutional. In practice, the services and facilities for Blacks and other minorities were consistently inferior, underfunded, and less convenient than those offered to Whites, or did not exist at all. And while segregation was written into law in the South, it was also practiced in the northern United States through housing patterns enforced by private covenants, bank lending practices, and job discrimination, including discriminatory labor union practices (Howard University, 2018).

1904 caricature of “White” and “Jim Crow” rail cars by John T. McCutcheon. Source: Wikipedia. Public domain.

The National Labor Relations Act of 1935 expanded rights for workers, resulting in higher wages, benefits, and health insurance for those represented by unions. However, the Act did not apply to service, domestic, and agricultural workers, and it allowed unions to discriminate against racial and ethnic minorities employed in industries such as manufacturing. As a result, minority workers were more likely to be relegated to low-wage jobs that did not provide health insurance (Yearby, Clark, & Figueroa, 2022).

In the mid-1940s, the federal government began funding construction of public hospitals and long-term care facilities through the Hospital Survey and Construction Act of 1946 (the Hill-Burton Act). Although the funding included a mandate that facilities be made available to all without regard to race, the law allowed states to build racially separate and unequal facilities (Yearby, Clark, & Figueroa, 2022).

In 1960, the federal Medical Assistance for the Aged program (known as Kerr-Mills) extended medical benefits to a new category of “medically indigent” adults aged 65 or older. Eligibility and benefit levels were left largely to the states, so the poorer the state, the weaker the program. By tying medical assistance for the aged to public assistance, Kerr-Mills inadvertently created a program associated with social stigma and institutional bias. Nevertheless, Kerr-Mills served as an important precursor to Medicaid.

The Impact of Slavery

The systemic discrimination that has impacted Black health dates to the first ships carrying enslaved Africans across the Atlantic. The colonial narrative of hierarchy and supremacy exists to this day, and has translated, centuries later, into gaping health disparities.

Meghana Keshavan, STAT Health, June 9, 2020

More than 150 years have passed since the abolition of slavery, yet its legacy remains. Social injustices related to historical trauma, institutional racism, and structural inequities continue to harm the health of many low-income and minority communities. These mechanisms contribute to high disease burdens, difficulty accessing healthcare, and a lack of trust in the healthcare system (Culhane-Pera et al., 2021).

American medical research has a long history of unethical treatment of Black research subjects. Cases of medical malfeasance and malevolence persisted even after the establishment of the Nuremberg Code* (Jones, 2021).

*Nuremberg Code: A set of ethical principles for human experimentation, developed in 1947 following the post-World War II trials of Nazi physicians for crimes against humanity.

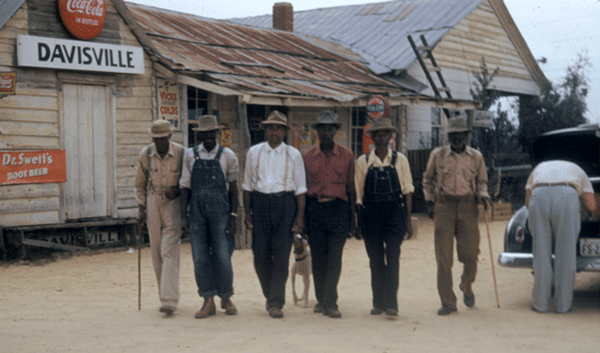

Medical ethicist Harriet A. Washington details some of the most egregious examples in her book “Medical Apartheid.” In the notorious Tuskegee Study of Untreated Syphilis in the Negro Male, the U.S. Public Health Service misled Black men into believing they were receiving treatment for syphilis when, in fact, they were not. The study lasted 40 years, continuing even after penicillin became an established cure for syphilis in the 1940s (Jones, 2021).

Group of men who were test subjects in the Tuskegee Syphilis Experiments. Source: Wikimedia. Public domain.

Perhaps less widely known are the unethical and unjustified experiments J. Marion Sims performed on enslaved women in the United States in the 1800s. These experiments earned Sims the nickname “father of modern gynecology.” Sims performed experimental surgery on enslaved women without anesthesia or even the basic standard of care typical for the time. Historian Deirdre Cooper Owens elaborates on this case, and on the many other ways Black women’s bodies have been used as guinea pigs, in her book “Medical Bondage” (Jones, 2021).

J. Marion Sims experimented on Anarcha, an enslaved 17-year-old, more than 30 times. His decision to withhold anesthesia was based on the racist assumption that Black people experience less pain than white people, a belief that persists among some medical professionals today (Jones, 2021).

Impact of Colonization and Slavery on Indigenous People of the Americas

Early travelers to the American West encountered unfree people nearly everywhere they went—on ranches and farmsteads, in mines and private homes, and even on the open market, bartered like any other tradeable good. Unlike on southern plantations, these men, women, and children weren’t primarily African American; most were Native American. Tens of thousands of Indigenous people labored in bondage across the western United States in the mid-19th century.

Kevin Waite

The Atlantic, November 25, 2021

When European explorers arrived in the Americas in the late 1400s, they introduced diseases to which Indigenous peoples had little or no immunity. The impact was rapid and deadly for people living along the coast of New England and in the Great Lakes region. In the early 1600s, smallpox alone (at times introduced intentionally) killed as many as 75% of the Huron and Iroquois people.

The impact of colonization on the health of Indigenous peoples has been atrocious. It has led to loss of cultural practices, language, traditional medicines, and ways of knowing and being. With forced assimilation, health inequities grew while traditional healing practices were forcibly replaced by “patriarchal healthcare systems” (Coen-Sanchez et al., 2022).

The exploitation and loss of tribal lands reflect the lasting impact of historical racist policies. Indigenous Peoples have been exposed to racist reproductive policies, limited access to reproductive health services, and environmental contamination (Yellow Horse et al., 2020).

The Health Impact of Extractive Industries

Extractive industries have had a lasting impact on the health and well-being of Indigenous communities worldwide. Even during normal operations of mining and energy projects, community health is often a concern. During the COVID-19 pandemic, conflicts over the implications of extractive industries for community health intensified. Communities became concerned about the physical and mental health effects of environmental contamination, new diseases potentially introduced by transient workers, disruptions to community relationships, and changes to local lifestyles (Bernauer and Slowey, 2020).

Did You Know...

Historically, uranium extraction has significantly affected some Indigenous communities. There is substantial evidence that living near an abandoned uranium mine is associated with reproductive damage. Abandoned uranium mines are associated not only with environmental contamination but also with structural inequalities, such as a higher percentage of households lacking complete plumbing and access to safe water (Yellow Horse et al., 2020).

The Impact of COVID-19 on Native American Communities

The COVID-19 pandemic exposed disparities in Asian, Black, Latinx, and Indigenous communities related to policies and practices that resulted in members of these communities living in multigenerational households, having greater reliance on public transportation, and having more front-line occupations with higher risk of COVID-19 exposure (Diop et al., 2021).

COVID-19 case and death rates among Native American communities fluctuated over the course of the pandemic. Throughout the U.S., counties with higher proportions of Native American residents had much higher death rates in May and June of 2021. Death rates tended to be lower after vaccine boosters became available (Bergmann et al., 2022).

To understand how COVID-19 has disproportionately affected Indigenous communities, it is critically important to consider the role of racism, particularly structural factors such as lack of access to safe water for frequent hand washing and a shortage of personal protective equipment (Yellow Horse et al., 2020).

Although rates varied depending on which COVID-19 surge was occurring, American Indian, Alaska Native, and Native Hawaiian persons had the highest case and death rates per 100,000 population of any race/ethnicity in the United States. Throughout the country, Native American patients were more likely than White patients to be hospitalized and to die. This held true across every “surge,” or sharp increase in cases (Bongiovanni et al., 2022).

San Francisco State University Associate Professor of Biology Wilfred Denetclaw, a Navajo man raised in the traditional Navajo way of life and teachings, shared the story of the Navajo Nation’s resilience during the pandemic. The Navajo government closed reservation borders during the height of the pandemic, imposed weekend curfews, and called on the University of California San Francisco and Health, Equity, Action, Leadership (HEAL) for additional health workers.

When vaccines became available, Navajo Nation President Jonathan Nez advocated strongly for vaccination, drawing on Navajo culture to frame it as a protective shield against COVID-19. The community took the message to heart, and its vaccination rates are now among the highest in the United States (Rajan, 2022).

At the start of the pandemic, the Navajo Nation had the highest COVID-19 infection rate anywhere in the United States. Intergenerational trauma, social isolation, lack of transportation, crowded multigenerational households, shortages in funding and supplies, widespread poverty, and an overworked healthcare workforce contributed to this disparity.

Now, the Navajo Nation is one of the safest places in America, due to its emphasis on collective responsibility, high vaccination rates, and public health measures. According to Jonathan Nez, Navajo Nation president, “While the rest of the country were saying no to masks, no to staying home, and saying you’re taking away my freedoms, here on Navajo, it wasn’t about us individually,” he said. “It was about protecting our families, our communities and our nation.”

Rachel Cohen, How the Navajo Nation Beat Back COVID

The Nation, January 14, 2022